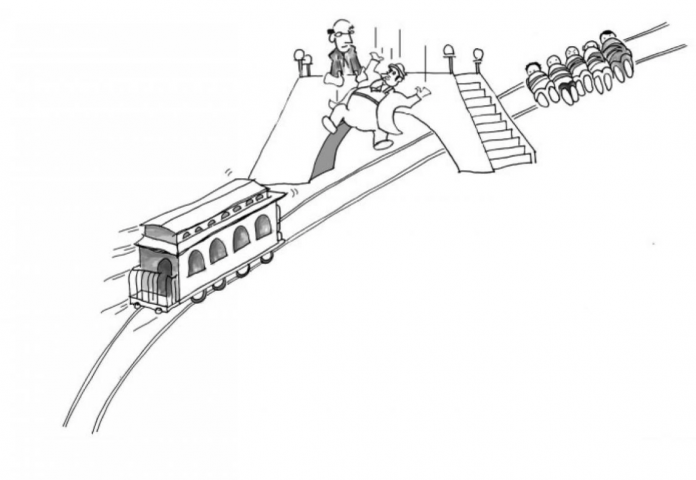

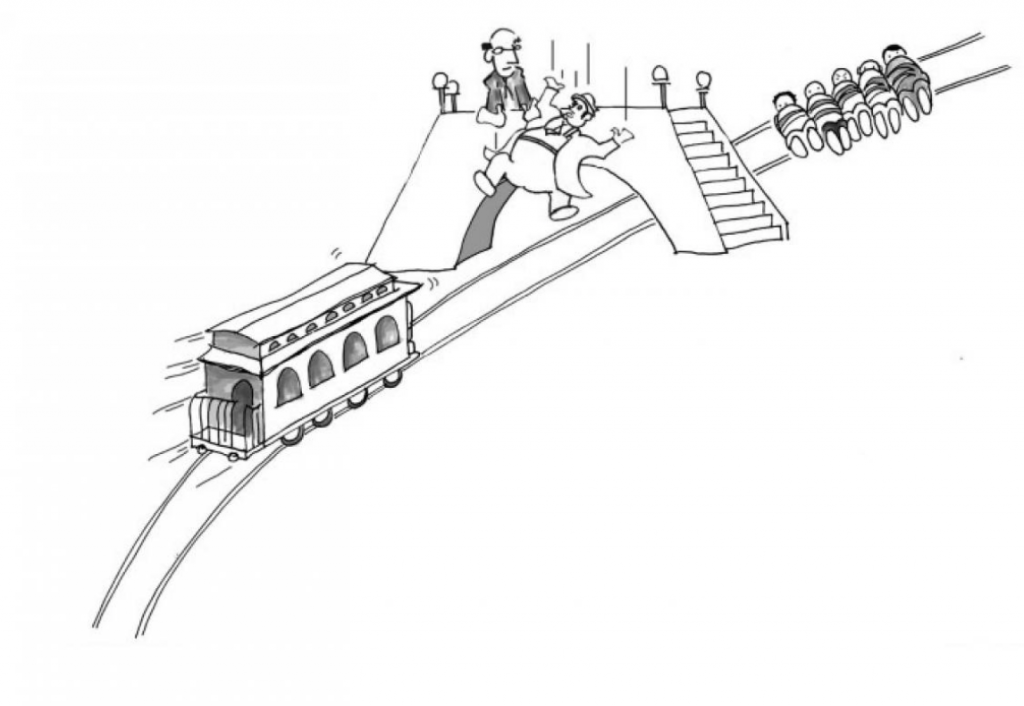

In a hypothetical dilemma, two options are presented: one, you, as the bystander, let a trolley kill five individuals, or two, you push one man in front of the moving trolley, killing him, in order to save five lives. In an alternative dilemma, you have the option to flip a switch and drop the man off the footbridge, effectively killing the one man and saving the five from a distance. Joshua Greene used this dilemma, the basis of what is known as “trolleyology,” to explain impulsive decision making.

The trolley scenario was one of many examples that Professor Joshua Greene used to illustrate human morality on Thursday, March 14. Greene holds a Ph.D. in philosophy and is a professor of psychology at Harvard University, specializing in moral judgment and decision making within cognitive science.

“Human Morality: Features and Bugs” was a culmination of his work in experimental psychology, neuroscience, and philosophy. Greene gave an overview of human morality, as well as “how our emerging scientific understanding of human morality can help us make better decisions.”

Greene addressed the moral problem of cooperation, in which individual and collective interests diverge, often referred to as the “Tragedy of the Commons.” Greene regards such conflicts of interest as the “fundamental problem of social interactions.”

In Greene’s view, the solution to the problems of cooperation is morality.

The “social function” of morality is solving the problems of “me versus us” and “us versus them.”

Greene compartmentalizes human morality in terms of “automatic” and “manual” settings, likening the distinct forms of morality to camera modes. These features are useful in understanding how people tend to make decisions concerning morality.

“Human decision making has the same basic idea [as camera settings],” Greene said. “That is, we have intuitions. We have gut reactions, often emotional gut reactions that tell us what’s right and what’s wrong.” These intuitions are analogous to the automatic setting.

Humans’ manual setting, however, entails “explicit reasoning, thinking through problems,” as Greene put it. “This is us saying, ‘well, this is the situation, this is my goal, and of all the options I have, which answer is going to get me to where I want to be?’”

When presented with the trolley dilemma described above, people are more likely to say that sacrificing one man’s life is worth saving five when the interaction is indirect (i.e., pulling a lever).

Interestingly, those who rely on manual decision making are more likely to sacrifice one man’s life for the sake of five, whereas those relying on more automatic, gut-driven decision making tend to want to protect the man on the footbridge.

Greene’s book “Moral Tribes” expounds on the value of the trolley dilemma in exploring morality: “When, and why, do the rights of the individual take precedence over the greater good? Every major moral issue—abortion, affirmative action, higher versus lower taxes, killing civilians in war, sending people to fight in war, rationing resources in healthcare, gun control, the death penalty—was in some way about the (real or alleged) rights of some individuals versus the (real or alleged) greater good. The Trolley Problem hit it right on the nose.”

As for the neurological component, morality “has no special place in the brain,” said Greene. While certain areas are active during human decision making, “all of these general brain processes are doing the same kinds of things but in different contexts.”

What is morality, if it serves no specific brain function?

It solves the problem of “me versus us.” Something is moral because of “what it does”; morality appears to be a social function.

A “bug” of morality is its relativity. Manifestations of morality are contingent on the cultures in which they arise. How are different cultures and social groups expected to get along?

Greene’s answer: “meta-morality,” or doing what yields the “most human good, maximizing happiness, and minimizing suffering.”

Humans’ automatic setting does a reasonably good job in aiding morally good decisions. But Greene suggested that in order to yield a just society, “we will have to rely on our manual setting. We can’t just rely on our gut.”

People across cultures have different drives and beliefs. “Tribes,” Greene said, “have different institutions. They have different ideas of what’s right and what’s wrong on a gut level. And if everybody just trusted their gut reaction, then we’re not going to be able to have a universal solution. The manual setting allows us to step back from our tribal institutions and think in terms of the more abstract and universal. We can ask ourselves, ‘what would a just society look like?’” People must think more universally.

Greene expressed his hope that “by understanding our moral thinking—how it got here, where it comes from, and how different assets of our brain push us in certain directions—we can have a little bit of perspective, make better choices, and in our own way, realize our quest to be even more human than we already are.”